
The history of artificial intelligence has been marked by several groundbreaking advances, and Generative Pre-trained Transformers (GPT) stand out as a true revolution in natural language processing. Tracing the evolution of GPT, from GPT-1 to GPT-4 and beyond, shows how these powerful AI models have transformed our interaction with technology. From rudimentary text generation to multimodal reasoning, each iteration has pushed the boundaries of what machines can achieve, paving the way for increasingly capable and versatile applications.

The Genesis: GPT-1 and the Dawn of Large Language Models

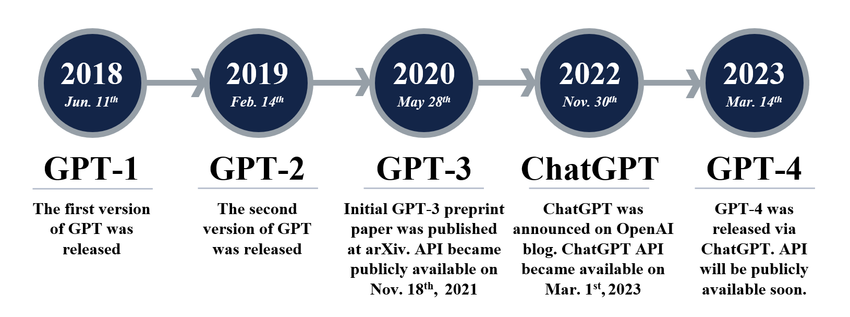

The story of GPT began with a bold new approach to language understanding, moving beyond traditional recurrent neural networks. OpenAI introduced GPT-1 in 2018, marking a significant milestone in the development of large language models (LLMs). This initial model demonstrated the immense potential of the transformer architecture for unsupervised pre-training on vast amounts of text data.

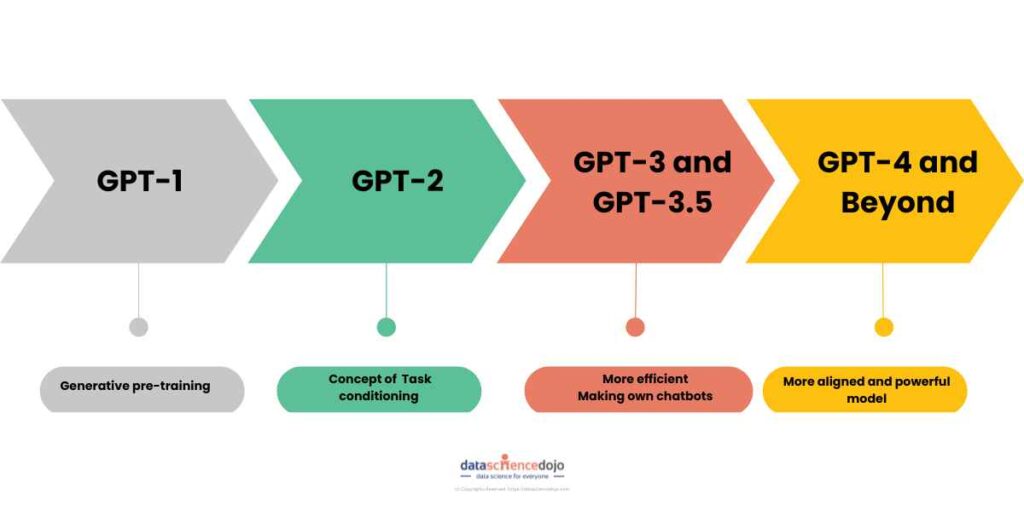

GPT-1 was designed to improve language understanding through generative pre-training: after fine-tuning, it achieved strong results on tasks such as natural language inference, question answering, and commonsense reasoning. Its architecture, a 12-layer decoder-only transformer with roughly 117 million parameters, was trained on the BooksCorpus dataset, which comprises over 7,000 unpublished books. This work laid the groundwork for the rapid evolution of the GPT models that followed, showing that a general-purpose model could learn a wide range of linguistic patterns.
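"Decoder-only" means every layer uses masked (causal) self-attention: each token can draw information only from itself and the tokens before it, which is what allows the model to be trained to predict what comes next. The sketch below is a single attention head in plain Python. It assumes identity query, key, and value projections (real models learn separate weight matrices) and omits the multiple heads, feed-forward sublayers, residual connections, and layer normalization of an actual GPT layer; all names are illustrative.

```python
import math


def causal_self_attention(x):
    """Single-head causal self-attention over a sequence of vectors.

    x is a list of n token vectors (each a list of d floats). For simplicity,
    the vectors themselves serve as queries, keys, and values (an assumption;
    real models apply learned projections first).
    Returns (outputs, attention_weights).
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for i, query in enumerate(x):
        # Causal mask: position i only scores keys at positions 0..i.
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        # Numerically stable softmax over the visible positions.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        all_weights.append(weights)
        # Each output is the weighted average of the visible value vectors.
        outputs.append(
            [sum(weights[j] * x[j][k] for j in range(i + 1)) for k in range(d)]
        )
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens)
for i, w in enumerate(weights):
    print(f"position {i} attends to {len(w)} position(s):", [round(v, 3) for v in w])
```

Because of the mask, changing the last token's vector leaves the outputs at earlier positions unchanged, which is exactly the property next-token training relies on.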

The Birth of Generative Pre-trained Transformers

The core innovation of GPT-1 was its two-stage training process: unsupervised pre-training followed by supervised fine-tuning. During pre-training, the model learned to predict the next token (a word or word fragment) in a sequence, and in doing so absorbed grammar, factual associations, and some reasoning patterns from raw text. This process allowed the model to develop a robust internal representation of language.
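The pre-training objective is easy to state concretely: every position in a text becomes a training example whose target is the token that follows, and training minimizes the average negative log-likelihood of those actual next tokens. The sketch below shows both steps. To stay self-contained, a count-based bigram model with add-one smoothing stands in for the transformer; that substitution, the whitespace tokenization, and all names are assumptions for illustration only.

```python
import math
from collections import Counter, defaultdict


def training_pairs(tokens, context_size):
    """Each position i yields (left context, token i): predict token i."""
    return [
        (tuple(tokens[max(0, i - context_size):i]), tokens[i])
        for i in range(1, len(tokens))
    ]


class BigramLM:
    """Toy stand-in for a transformer: it looks only at the last context
    token and uses add-one smoothing so no probability is zero."""

    def __init__(self, corpus):
        self.vocab = sorted({tok for sent in corpus for tok in sent})
        self.counts = defaultdict(Counter)
        for sent in corpus:
            for prev, nxt in zip(sent, sent[1:]):
                self.counts[prev][nxt] += 1

    def prob(self, context, target):
        row = self.counts[context[-1]]
        return (row[target] + 1) / (sum(row.values()) + len(self.vocab))


def lm_loss(model, tokens, context_size=4):
    """Average negative log-likelihood of each next token (the pre-training loss)."""
    pairs = training_pairs(tokens, context_size)
    return sum(-math.log(model.prob(ctx, tgt)) for ctx, tgt in pairs) / len(pairs)


corpus = [
    "the cat sat on the mat".split(),
    "the dog sat on the rug".split(),
]
model = BigramLM(corpus)
print(training_pairs("the cat sat".split(), context_size=4))
# [(('the',), 'cat'), (('the', 'cat'), 'sat')]
print("fluent:", round(lm_loss(model, "the cat sat on the mat".split()), 3))
print("scrambled:", round(lm_loss(model, "mat the on sat cat the".split()), 3))
```

The fluent sentence receives a lower loss than its scrambled version; a real GPT minimizes the same quantity, only with a transformer computing the probabilities over a much longer context.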

After pre-training, GPT-1 could be fine-tuned on specific downstream tasks with relatively little labeled data and only minimal changes to its architecture, an improvement over earlier approaches that typically required task-specific architectures and substantial task-specific training. This adaptability highlighted the potential for a single, powerful model to handle diverse natural language processing challenges. While limited compared to its successors, GPT-1 set the stage for the generative AI explosion.
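GPT-1 handled different tasks without redesigning the network by converting each task's input into a single token sequence framed by special start, delimiter, and end ("extract") tokens; the model's representation at the final token then feeds a small task-specific output layer. The sketch below shows those input formats, plus the shape of GPT-1's fine-tuning loss: the task loss with language modeling kept as a weighted auxiliary objective. The literal token spellings, whitespace tokenization, function names, and the default auxiliary weight are illustrative assumptions.

```python
# Placeholder spellings for GPT-1's learned special tokens (assumption).
START, DELIM, EXTRACT = "<s>", "$", "<e>"


def classification_input(text):
    """Single-text tasks (e.g. sentiment): wrap the text in start/extract tokens."""
    return [START, *text.split(), EXTRACT]


def pair_input(first, second):
    """Text-pair tasks (e.g. entailment): join both texts with a delimiter."""
    return [START, *first.split(), DELIM, *second.split(), EXTRACT]


def similarity_inputs(a, b):
    """Similarity has no inherent order, so both orderings are encoded."""
    return [pair_input(a, b), pair_input(b, a)]


def multiple_choice_inputs(context, answers):
    """One sequence per candidate answer; each is scored independently."""
    return [pair_input(context, answer) for answer in answers]


def fine_tuning_loss(task_loss, lm_loss, aux_weight=0.5):
    """Task loss plus a weighted language-modeling auxiliary loss.
    The default weight here is an assumed value for illustration."""
    return task_loss + aux_weight * lm_loss


print(pair_input("A man is sleeping", "A person rests"))
# ['<s>', 'A', 'man', 'is', 'sleeping', '$', 'A', 'person', 'rests', '<e>']
print(len(multiple_choice_inputs("Where do fish live?", ["water", "trees", "sky"])))
# 3
```

Because every task reduces to "read a sequence, then classify from the last position," the same pre-trained weights serve all of them, which is what made fine-tuning cheap.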

Scaling Up: From GPT-2’s Breakthrough to GPT-3’s Paradigm Shift

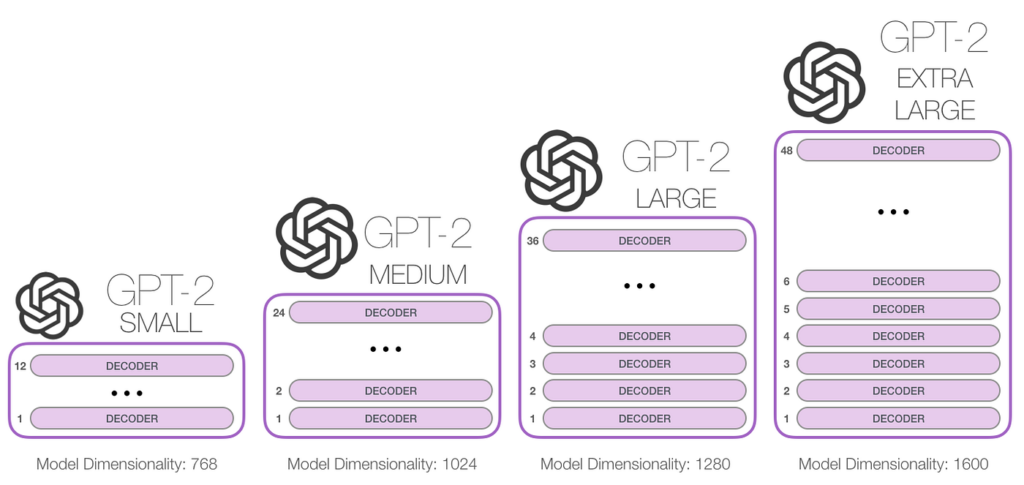

Subsequent GPT models brought order-of-magnitude increases in scale and capability, fundamentally altering expectations for AI. GPT-2, released in 2019, built on the success of its predecessor by dramatically increasing the model size and the amount of training data. This scaling led to unprecedented coherence and fluency in generated text.

GPT-2 made headlines for its ability to produce remarkably human-like text, leading OpenAI to initially withhold the full model, citing concerns about misuse, and to release it in stages over 2019. Its zero-shot performance, handling tasks without task-specific fine-tuning, suggested that large-scale pre-training captured far more than surface-level patterns. This marked a significant leap forward in the evolution of GPT, showcasing the power of scale.

GPT-2: Unsupervised Learning and Zero-Shot Capabilities

With 1.5 billion parameters and trained on WebText, a dataset of roughly 40 GB of text from about 8 million web pages linked from Reddit, GPT-2 was a massive upgrade over GPT-1. Its training objective was still just next-word prediction, yet at this scale that objective unlocked surprisingly rich language understanding. The model could generate coherent news-style articles, attempt summaries, and sustain a consistent style over several paragraphs.

Zero-shot learning became a hallmark of GPT-2, illustrating that the model could generalize to new tasks without any task-specific examples or fine-tuning. It could attempt translation, summarization, or question answering just by being prompted appropriately, though usually well below the level of specialized systems on those tasks. The fluency of its open-ended text was high enough that it sparked widespread discussion about the ethical implications of advanced AI text generation.
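In practice, "prompted appropriately" means phrasing the task so that its answer is the natural continuation of the text. The GPT-2 paper, for example, elicited summaries by appending "TL;DR:" after an article. The helpers below only build such prompt strings; the question-answering template and the function names are illustrative assumptions, and the resulting string would be passed to whatever model or API you use, with the generated continuation read as the answer.

```python
def summarization_prompt(article: str) -> str:
    """Zero-shot summarization cue from the GPT-2 paper: end with "TL;DR:"."""
    return f"{article.strip()}\nTL;DR:"


def qa_prompt(passage: str, question: str) -> str:
    """Illustrative question-answering template (an assumed format).
    No worked examples are included, which is what makes it zero-shot."""
    return f"{passage.strip()}\nQ: {question.strip()}\nA:"


article = "The city council approved a new network of bike lanes on Tuesday."
print(summarization_prompt(article))
print(qa_prompt("GPT-2 was trained on WebText.", "What was GPT-2 trained on?"))
```

The model never sees a labeled example of the task; the cue at the end of the prompt is the only signal of what kind of continuation is wanted.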

GPT-3: The Leap to Few-Shot Learning and Massive Scale

The release of GPT-3 in 2020 represented another monumental leap, boasting an astounding 175 billion parameters – over 100 times more than GPT-2. This immense scale, combined with even larger and more diverse training data, unlocked truly remarkable few-shot learning capabilities. GPT-3 could perform a vast array of tasks with only a handful of examples, or even just a natural language instruction.
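A back-of-the-envelope calculation shows where these parameter counts come from. Each transformer layer holds about 12·d² weights (4·d² in the attention projections and 8·d² in a feed-forward block whose hidden size is 4·d), so a model with n layers has roughly 12·n·d² weights plus its token and position embeddings. The sketch below applies that standard approximation, which ignores biases and layer-norm parameters, to the configurations reported for GPT-1, the largest GPT-2, and the largest GPT-3; the function name is illustrative.

```python
def approx_params(n_layers, d_model, vocab_size, context_len):
    """Rough decoder-only transformer parameter count
    (biases and layer-norm parameters are ignored)."""
    per_layer = 12 * d_model ** 2  # 4d^2 attention + 8d^2 feed-forward
    embeddings = (vocab_size + context_len) * d_model  # token + position tables
    return n_layers * per_layer + embeddings


# (layers, d_model, vocabulary size, context length)
configs = {
    "GPT-1": (12, 768, 40478, 512),
    "GPT-2 (1.5B)": (48, 1600, 50257, 1024),
    "GPT-3 (175B)": (96, 12288, 50257, 2048),
}

estimates = {name: approx_params(*cfg) for name, cfg in configs.items()}
for name, n in estimates.items():
    print(f"{name:>12}: ~{n / 1e9:.2f}B parameters")

ratio = estimates["GPT-3 (175B)"] / estimates["GPT-2 (1.5B)"]
print(f"GPT-3 / GPT-2: about {ratio:.0f}x")
```

This yields roughly 0.12B, 1.56B, and 174.6B parameters, close to the reported 117M, 1.5B, and 175B, and a GPT-3-to-GPT-2 ratio of about 112. Nearly all of GPT-3's growth comes from the 12·n·d² term: doubling the layers and making each layer almost eight times wider multiplies the per-layer weights by about 59.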

GPT-3 produced fluent, coherent language across a wide range of domains. Demonstrations showed it writing creative fiction, generating simple working code, drafting emails, and even producing user-interface layouts from plain text descriptions. This paradigm shift cemented the idea that a single, sufficiently large language model could serve as a versatile foundation for countless AI applications.

The model’s ability to learn “in-context” from a few examples within the prompt itself, without any weight updates, was a game-changer. This adaptability made GPT-3 a powerful tool for developers and researchers, significantly accelerating innovation in AI-powered products and services. Its impact on the field of natural language processing was profound and lasting.
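In-context learning needs no special machinery: the demonstrations are simply part of the prompt text. The sketch below assembles a few-shot prompt in the style of the English-to-French example from the GPT-3 paper; the function name and the "=>" separator are illustrative choices, and the model is expected to continue the final line with the answer.

```python
def few_shot_prompt(instruction, examples, query, sep=" => "):
    """Build a prompt from a task description, k worked examples, and a query.

    k = 0 gives a zero-shot prompt, k = 1 one-shot, and k > 1 few-shot.
    No model weights change; the examples only condition the continuation.
    """
    lines = [instruction]
    lines += [f"{source}{sep}{target}" for source, target in examples]
    lines.append(f"{query}{sep}".rstrip())  # leave the answer for the model
    return "\n".join(lines)


demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]
print(few_shot_prompt("Translate English to French:", demos, "cheese"))
# Translate English to French:
# sea otter => loutre de mer
# peppermint => menthe poivrée
# cheese =>
```

Because zero-, one-, and few-shot prompts differ only in the number of examples, one function covers all three settings in which GPT-3 was evaluated.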

The Era of Advanced AI: GPT-4 and the Future of Generative Pre-trained Transformers

The evolution of GPT continued with increasingly sophisticated models. GPT-4, launched in March 2023, brought significant advances in reasoning, safety, and multimodality. OpenAI did not disclose GPT-4’s parameter count, architecture details, or training data, so its progress is best judged by what it can do rather than by its size.

GPT-4 exhibits a deeper understanding of complex instructions and can handle more intricate tasks with greater accuracy and reliability. Its enhanced reasoning capabilities allow it to tackle problems requiring logical inference and abstract thought more effectively than its predecessors. The model’s improvements in safety and alignment also reflect a growing focus on responsible AI development.

GPT-4: Multimodality and Enhanced Reasoning

One of the most significant breakthroughs with GPT-4 is its multimodality: it accepts images as well as text as input, while its responses are text. This allows users to input images and ask questions about them, opening up new avenues for interaction and application. For example, one could provide an image of a recipe and ask GPT-4 to list the ingredients or suggest modifications.
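To make the recipe example concrete, the sketch below builds a request body in the shape used by OpenAI's Chat Completions API for image input, where a user message's content is a list mixing text parts and image parts. It only constructs and prints the JSON and sends nothing. The model name is a placeholder assumption (use whichever vision-capable model your account offers), the helper name is hypothetical, and the image is embedded as a base64 data URL.

```python
import base64
import json

MODEL = "YOUR-VISION-MODEL"  # placeholder, not a real model name


def image_question_request(question: str, image_bytes: bytes,
                           mime: str = "image/jpeg") -> dict:
    """Build a Chat Completions-style request asking a question about an image."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Stand-in bytes; in practice, read a real photo, e.g. open("recipe.jpg", "rb").read()
fake_image = b"\xff\xd8\xff\xe0 not a real jpeg"
request = image_question_request("List the ingredients in this recipe.", fake_image)
print(json.dumps(request, indent=2))
```

With the official `openai` Python package, the same dictionary could be sent as `client.chat.completions.create(**request)`; the reply comes back as ordinary text.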

GPT-4’s performance on various professional and academic benchmarks, including a simulated Uniform Bar Exam on which it scored around the top 10% of test takers, highlighted its stronger reasoning abilities. OpenAI’s official announcement and technical report on GPT-4 provide further detail on its capabilities and the testing it underwent. This model demonstrates a more nuanced understanding of context and intent, leading to more accurate and helpful responses across a wider range of queries.

The model also features improved steerability, allowing users to guide its behavior and tone more effectively, which makes it a more versatile tool for specific tasks. These advancements collectively push the boundaries of what AI can achieve, making GPT-4 a powerful assistant in many professional and creative endeavors. Its ability to work across both text and images marks a new era for AI.
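In OpenAI's chat interface to GPT-4, steering is typically done with a "system" message that sets style and behavior before the user's request. The sketch below builds two message lists that differ only in that system message; the instruction wording and the helper name are illustrative assumptions, and each list would be sent as the `messages` field of a Chat Completions request.

```python
def steered_messages(system_instruction: str, user_prompt: str) -> list[dict]:
    """Prepend a system message that sets tone and behavior for the reply."""
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": user_prompt},
    ]


question = "Explain what a transformer model is."
tutor = steered_messages(
    "You are a patient tutor. Use plain language and one everyday analogy.",
    question,
)
reviewer = steered_messages(
    "You are a terse technical reviewer. Answer in at most three bullet points.",
    question,
)
for messages in (tutor, reviewer):
    print(messages[0]["content"])
```

Keeping the user message identical isolates the effect of the system message, which is a simple way to compare different steering instructions.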

Beyond GPT-4: The Road Ahead

Looking beyond GPT-4, the future of Generative Pre-trained Transformers promises further transformative developments. Researchers are actively working on improving efficiency, reducing computational costs, and developing more specialized models for niche applications. Artificial General Intelligence (AGI) remains a long-term goal; many see each GPT iteration as a step toward it, though how close current systems are is widely debated.

Key areas of future focus include enhancing factual accuracy, mitigating biases, and ensuring robust ethical alignment in increasingly powerful AI systems. The challenges of “hallucination,” where models generate plausible but incorrect information, are also a major area of research. As these models become more integrated into daily life, addressing these issues will be paramount for widespread adoption and trust.

The evolution of GPT is a continuous journey of innovation, with each new model building on the strengths of its predecessors while introducing new capabilities. Future GPT models will likely be even more multimodal, handling more kinds of input and output, and may engage in more complex reasoning and problem-solving. The potential for these systems to transform industries and augment human capabilities is immense, promising an exciting future for generative AI.

For more in-depth technical details and insights into the latest advancements, you can explore the official OpenAI research page on GPT-4, which offers comprehensive documentation and analysis of its capabilities and limitations. The ongoing progress in this field continues to reshape our understanding of AI’s potential.