The rise of large language models like GPT has revolutionized how we interact with technology, opening doors to unprecedented levels of creativity and productivity. However, merely typing a question into an AI isn’t always enough to get the precise, high-quality output you desire. This is where the crucial skill of Prompt Engineering comes into play, serving as the bridge between human intent and artificial intelligence capabilities. Mastering GPT for better results fundamentally depends on how effectively you communicate your needs to the model.

Unlocking GPT’s Potential: What is Prompt Engineering?

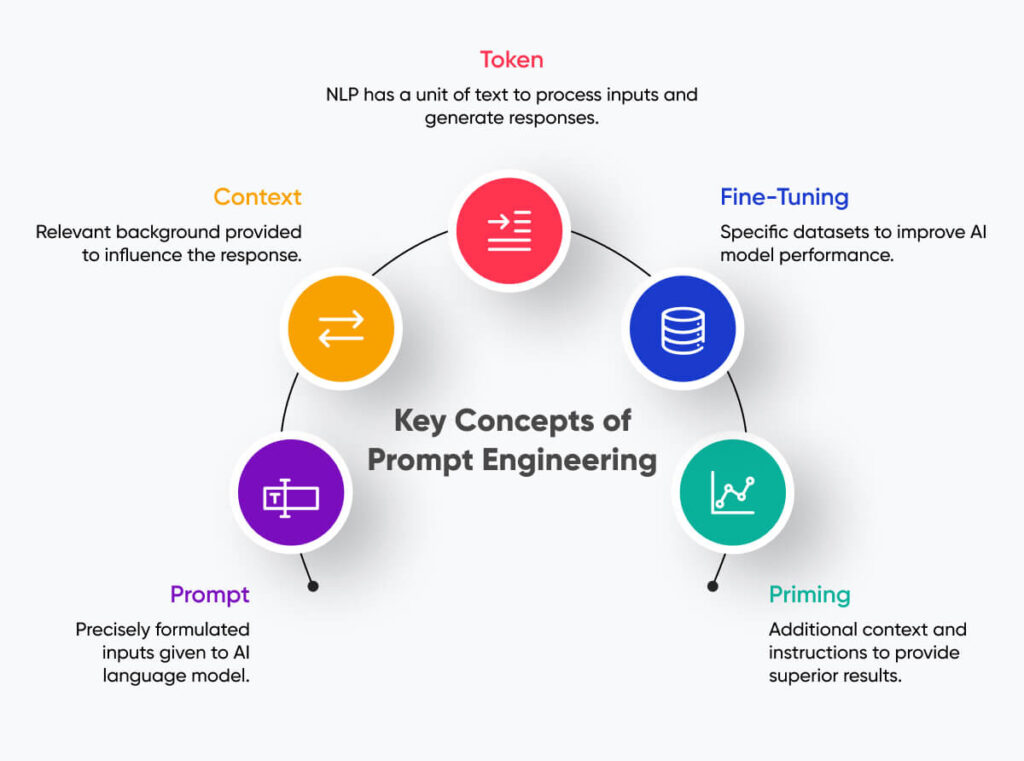

Prompt engineering is the art and science of designing effective inputs, or “prompts,” to guide AI models like GPT toward desired outputs. It involves understanding how these models process information and then structuring your requests in a way that maximizes their potential. This discipline turns vague queries into precise, actionable instructions, making it far more likely that the AI performs the task as intended.

Essentially, prompt engineering is about learning the language of AI to communicate more clearly and efficiently. It’s not just about typing a command; it’s about crafting a well-thought-out query that considers context, desired format, tone, and specific constraints. By doing so, you move beyond generic responses, unlocking GPT’s full power to produce highly relevant, accurate, and creative content tailored to your specific needs. This practice is vital for anyone looking to consistently achieve superior outcomes from their AI interactions.

Crafting Superior Prompts: Essential Techniques for GPT

Achieving consistently excellent results from GPT requires more than basic communication; it demands a strategic approach to prompt design. The journey to mastering GPT for better results begins with understanding and applying fundamental techniques that significantly enhance the quality of AI-generated content. These core principles form the bedrock of effective prompt engineering, guiding the model toward your objectives.

Be Specific and Clear

Ambiguity is the enemy of good AI output. When crafting your prompts, clarity and specificity are paramount to ensure GPT understands exactly what you’re asking for. Avoid vague language and instead provide precise instructions, defining roles, formats, and desired outcomes. This meticulous approach minimizes misinterpretations and leads directly to more accurate and useful responses from the model.

* Define the AI’s Role: Tell GPT to “Act as an expert SEO copywriter” or “You are a seasoned financial analyst.”
* Specify Output Format: Request “a bulleted list,” “a 500-word article,” or “a JSON object.”
* Set the Tone: Instruct for a “professional,” “humorous,” or “concise” tone.
* Indicate Length: Clearly state word count, sentence count, or paragraph count.
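To make the checklist concrete, here is a minimal Python sketch that assembles a prompt from the four elements above. The `PromptSpec` class, the `build_prompt` helper, and the sample task are hypothetical illustrations, not part of any library. The resulting string is simply what you would paste into a chat window or send through an API.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the elements of a specific, clear prompt."""
    role: str           # who the model should act as
    task: str           # what it should do
    output_format: str  # e.g. "a bulleted list" or "a JSON object"
    tone: str           # e.g. "professional" or "concise"
    length: str         # e.g. "no more than 80 words"


def build_prompt(spec: PromptSpec) -> str:
    """Join the elements into one explicit prompt, one instruction per line."""
    return "\n".join([
        f"You are {spec.role}.",
        f"Task: {spec.task}",
        f"Format: {spec.output_format}.",
        f"Tone: {spec.tone}.",
        f"Length: {spec.length}.",
    ])


# A vague request leaves role, format, tone, and length for the model to guess.
vague = "Write something about our new app."

# The same request with every element spelled out.
specific = build_prompt(PromptSpec(
    role="an expert SEO copywriter",
    task="Describe a budgeting app for college students.",
    output_format="a bulleted list of five selling points",
    tone="concise and friendly",
    length="no more than 80 words",
))
print(specific)
```

Keeping each element on its own line makes it easy to see which instruction to change when a response misses the mark.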

Provide Context and Constraints

Just like humans, AI performs better when it has sufficient background information and clear boundaries. Providing context helps GPT understand the scenario or problem you’re addressing, enabling it to generate more relevant and informed responses. Constraints, on the other hand, guide the AI away from undesirable outputs and keep it focused on the task at hand, which is crucial for mastering GPT for better results.

* Target Audience: Specify if the content is for “technical experts,” “general consumers,” or “students.”
* Background Information: Include relevant facts, previous conversations, or necessary data points.
* Negative Constraints: Tell GPT what not to do, e.g., “Do not use jargon,” or “Avoid clichés.”
* Examples: Provide a few examples of the desired output style or format (few-shot prompting).
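One way to make sure none of these four ingredients is forgotten is to assemble them programmatically. The sketch below uses a hypothetical Python helper, `build_contextual_prompt`. The section labels (“Audience:”, “Do not:”, and so on) are an arbitrary convention rather than a format GPT requires, and the background facts and style example are invented placeholders.

```python
def build_contextual_prompt(
    task: str,
    audience: str,
    background: list[str],
    avoid: list[str],
    examples: list[str],
) -> str:
    """Combine a task with audience, background, negative constraints, and style examples.

    Empty lists are skipped so the prompt only contains sections you filled in.
    """
    sections = [f"Task: {task}", f"Audience: {audience}"]
    if background:
        sections.append("Background:\n" + "\n".join(f"- {fact}" for fact in background))
    if avoid:
        sections.append("Do not:\n" + "\n".join(f"- {rule}" for rule in avoid))
    if examples:
        sections.append(
            "Examples of the desired style:\n" + "\n".join(f"- {ex}" for ex in examples)
        )
    return "\n\n".join(sections)


# Placeholder scenario: the facts and the example below are invented for illustration.
prompt = build_contextual_prompt(
    task="Explain what our new auto-save feature does.",
    audience="general consumers with no technical background",
    background=[
        "Auto-save stores a draft every 30 seconds.",
        "Drafts are kept for 7 days.",
    ],
    avoid=["use jargon", "use marketing clichés"],
    examples=[
        "Dark mode switches the screen to light text on a dark background, "
        "which some people find easier on the eyes."
    ],
)
print(prompt)
```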

Use Iteration and Experimentation

Prompt engineering is rarely a one-shot process; it’s an iterative journey of refinement and discovery. The first prompt you write might not yield the perfect result, and that’s perfectly normal. Effective prompt engineers understand the value of testing different approaches, analyzing the outputs, and making adjustments based on what they learn.

This iterative feedback loop is essential for continually improving your prompts and, consequently, the quality of GPT’s responses. Don’t be afraid to experiment with different phrasing, add or remove context, or modify constraints. Each iteration brings you closer to mastering GPT for better results by fine-tuning your communication with the AI.
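Iteration is easier when you decide up front what an acceptable response looks like and check for it. The following Python sketch assumes you have some function that sends a prompt to GPT and returns text. Here that function is replaced by a hypothetical stand-in, `fake_generate`, so the example runs offline. The `check_output` and `refine` helpers are illustrative names, and word count plus banned terms are just two example criteria.

```python
from typing import Callable


def check_output(text: str, max_words: int, banned: list[str]) -> list[str]:
    """Return the problems found in a response; an empty list means it passed."""
    problems = []
    word_count = len(text.split())
    if word_count > max_words:
        problems.append(f"Response has {word_count} words; the limit is {max_words}.")
    for term in banned:
        if term.lower() in text.lower():
            problems.append(f"Response uses the banned term '{term}'.")
    return problems


def refine(
    prompt: str,
    generate: Callable[[str], str],
    max_words: int,
    banned: list[str],
    max_rounds: int = 3,
) -> tuple[str, str]:
    """Generate, check, and revise the prompt until the output passes or rounds run out.

    Returns the final prompt and the last response.
    """
    output = generate(prompt)
    for _ in range(max_rounds - 1):
        problems = check_output(output, max_words, banned)
        if not problems:
            break
        # Feed the concrete failures back into the next version of the prompt.
        prompt += "\nRevise your answer to fix these issues:\n" + "\n".join(
            f"- {p}" for p in problems
        )
        output = generate(prompt)
    return prompt, output


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: wordy until asked to revise."""
    if "Revise" in prompt:
        return "Track spending in seconds."
    return "Our synergy-driven app lets you effortlessly track every single expense you make."


final_prompt, final_output = refine(
    "Write a tagline for a budgeting app.", fake_generate, max_words=8, banned=["synergy"]
)
print(final_prompt)
print(final_output)
```

Automated checks like these catch mechanical failures such as length or forbidden words. Judging whether the content itself is good still means reading the outputs.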

Beyond the Basics: Advanced Prompting for Optimal Outcomes

Once you’ve mastered the foundational techniques of prompt engineering, you can delve into more sophisticated strategies that unlock even greater potential from GPT. These advanced methods allow for more complex problem-solving, nuanced content generation, and ultimately, a much higher degree of control over the AI’s output. Embracing these techniques is key to truly mastering GPT for better results in demanding applications.

Few-Shot and Zero-Shot Learning

These concepts refer to how much guidance you provide to the model in terms of examples. Zero-shot learning involves providing no examples, relying solely on the prompt’s instructions. This works well for straightforward tasks where the model’s pre-trained knowledge is sufficient. Few-shot learning, however, involves providing a few examples within the prompt to demonstrate the desired input-output pattern, which can significantly improve performance for specific styles or formats.

For instance, if you want GPT to summarize text in a very particular style, giving it a couple of examples of text and their desired summaries can guide it effectively. This technique is particularly powerful for tasks requiring adherence to a unique format, tone, or specific data extraction rules, making it indispensable for achieving highly customized outcomes.
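The difference is easiest to see in how the request is structured. This Python sketch builds both variants as lists of `{"role": ..., "content": ...}` messages, the format used by chat-style APIs such as OpenAI’s Chat Completions. The API call itself is omitted, and the function names and sample texts are hypothetical.

```python
Message = dict[str, str]


def zero_shot(instruction: str, text: str) -> list[Message]:
    """Instruction only: the model relies on what it learned in pre-training."""
    return [{"role": "user", "content": f"{instruction}\n\n{text}"}]


def few_shot(instruction: str, examples: list[tuple[str, str]], text: str) -> list[Message]:
    """Instruction plus input/output demonstrations, shown as earlier conversation turns."""
    messages: list[Message] = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": text})  # the real input comes last
    return messages


instruction = "Summarize the text as a headline of at most six words."

# Made-up demonstration pairs that show the exact headline style we want.
examples = [
    ("The city council voted on Tuesday to extend library opening hours on weekends.",
     "Council extends weekend library hours"),
    ("Researchers reported that a new battery design charged twice as fast in lab tests.",
     "New battery charges twice as fast"),
]

new_text = "The school district announced that every classroom will get air conditioning next summer."

for message in few_shot(instruction, examples, new_text):
    print(f"{message['role']:>9}: {message['content']}")
```

Placing each example as a prior user/assistant exchange is one common way to format few-shot prompts. Writing the examples inline in a single message also works.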

Chain-of-Thought Prompting

Chain-of-Thought (CoT) prompting is a technique that encourages GPT to “think step-by-step” before giving a final answer. Instead of asking only for the solution, you prompt the model to write out its reasoning, breaking a complex problem into smaller, logical steps. This method can substantially improve accuracy on tasks that require multi-step reasoning, such as arithmetic word problems or logic puzzles.

Because the model generates its answer token by token, asking it to write out intermediate steps lets each later step build on the earlier ones, somewhat like a person showing their work, instead of jumping straight to a conclusion. The written steps also let you see where an answer went wrong, although they are not guaranteed to reflect exactly how the model arrived at its answer. For a deeper dive into how this and other advanced techniques can transform your AI interactions, explore resources like the OpenAI research on language models, which often covers such advancements.
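A chain-of-thought prompt is often paired with a fixed marker for the final answer, so the answer can be pulled out of the longer response. In this Python sketch, the “Final answer:” convention, the `cot_prompt` and `extract_final_answer` helpers, and the sample response are all assumptions for illustration. The response is hand-written, not real model output.

```python
import re
from typing import Optional

COT_TEMPLATE = (
    "{question}\n\n"
    "Work through the problem step by step, numbering each step. "
    "Then give the result on its own line in the form 'Final answer: <answer>'."
)


def cot_prompt(question: str) -> str:
    """Wrap a question with a request for explicit intermediate reasoning."""
    return COT_TEMPLATE.format(question=question)


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Final answer:' line, or None if it is missing."""
    matches = re.findall(r"^Final answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


question = (
    "A cafe sells 23 coffees in the morning and 17 in the afternoon. "
    "Each coffee costs $3. How much revenue does it make?"
)
print(cot_prompt(question))

# Hand-written example of the kind of response the prompt asks for.
sample_response = (
    "Step 1: Total coffees sold: 23 + 17 = 40.\n"
    "Step 2: Revenue: 40 x $3 = $120.\n"
    "Final answer: $120"
)
print(extract_final_answer(sample_response))
```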

Role-Playing and Persona Assignment

Assigning a specific persona or role to GPT can strongly influence the style, tone, and even which aspects of its knowledge it emphasizes. By telling the AI to “Act as a seasoned marketing strategist” or “You are a friendly customer service representative,” you guide its output to align with the characteristics of that persona. This technique is invaluable for generating content that needs to resonate with a particular audience or reflect a specific brand voice.

This method allows you to tailor GPT’s responses to be more appropriate and effective for diverse communication needs. Whether you need content that is authoritative, empathetic, technical, or creative, persona assignment helps in mastering GPT for better results by ensuring the AI adopts the right voice and perspective for your specific requirements.
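In chat-based interfaces, a persona is usually set once in a system message so that it applies to the whole conversation. This Python sketch uses the role/content message format accepted by chat APIs such as OpenAI’s. The persona descriptions and the `persona_messages` helper are hypothetical examples, and no API call is made.

```python
# Hypothetical persona descriptions; adjust the wording to your own brand voice.
PERSONAS = {
    "strategist": (
        "You are a seasoned marketing strategist. Be direct, tie advice to "
        "business goals, and suggest metrics to track."
    ),
    "support": (
        "You are a friendly customer service representative. Be warm and "
        "patient, and avoid technical jargon."
    ),
}


def persona_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Put the persona in a system message, followed by the user's question."""
    if persona not in PERSONAS:
        raise ValueError(f"Unknown persona: {persona!r}")
    return [
        {"role": "system", "content": PERSONAS[persona]},
        {"role": "user", "content": question},
    ]


question = "A customer says our app is too expensive. How should we respond?"

# Same question, two voices: only the system message changes.
for name in PERSONAS:
    messages = persona_messages(name, question)
    print(f"[{name}] {messages[0]['content']}")
```

Swapping only the system message while keeping the user question fixed is a simple way to compare how different personas change the response.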

Mastering Prompt Engineering is an ongoing journey that combines technical understanding with creative problem-solving. By consistently applying these essential and advanced techniques, you can move beyond basic AI interactions to truly harness the power of GPT, generating superior, tailored, and highly effective results for any task. The more you practice and experiment, the more proficient you will become in guiding these powerful models to serve your exact needs.