Artificial intelligence has advanced rapidly in recent years, and Generative Pre-trained Transformers, commonly known as GPT, are among the most visible results. These models have changed how people interact with technology by understanding, generating, and processing human language with a fluency earlier systems could not match. From automating routine tasks to powering new applications, GPT now touches enough of the digital landscape that understanding what it is and how it works is useful for almost anyone. This article covers the core concepts of GPT, its inner workings, its main applications, and the promising yet challenging future it presents.

What is GPT? Defining Generative Pre-trained Transformers

GPT, an acronym for Generative Pre-trained Transformer, refers to a class of large language models designed to produce human-like text. The “Generative” aspect highlights its capability to create new content, whether it’s an email, an article, or even creative writing, rather than just classifying or summarizing existing information. This generative power allows GPT models to engage in dynamic conversations and fulfill complex linguistic requests.

The “Pre-trained” component signifies that these models undergo extensive initial training on a massive dataset of text and code from the internet. This training phase allows the model to learn grammar, facts, reasoning abilities, and various writing styles, forming a broad understanding of language patterns. This foundational knowledge is what enables GPT to perform a wide array of natural language processing tasks effectively.

Finally, “Transformer” refers to the specific neural network architecture that underpins these models, pioneered by Google researchers in 2017. This architecture is particularly adept at handling sequential data like language, allowing the model to weigh the importance of different words in a sentence and understand context over long distances. This innovative design is a key reason for the remarkable success and capabilities of modern GPT models.
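To make the idea of weighing words concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation of the Transformer. It is a simplification under stated assumptions: the three 2-dimensional token vectors are made up for illustration, and the same vectors stand in for queries, keys, and values, whereas a real model derives each through learned projection matrices and runs many attention heads in parallel. The causal mask, where each position sees only itself and earlier positions, reflects how GPT-style models read text left to right.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    Position i attends only to positions 0..i.
    """
    dim = len(keys[0])
    width = len(values[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Similarity of this token to itself and every earlier token.
        scores = [dot(q, keys[j]) / math.sqrt(dim) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [sum(w * values[j][d] for j, w in enumerate(weights))
                 for d in range(width)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for three tokens (illustrative values only).
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(vectors, vectors, vectors)
for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

In this toy run the third token places the most weight on itself, because its vector has the largest dot product with its own key.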

How GPT Models Work: The Core Technology Behind the Magic

At its heart, a GPT model operates using deep learning techniques, specifically a type of artificial neural network. These networks are trained to identify intricate patterns and relationships within vast amounts of text data. During its pre-training phase, the model processes billions of words, learning to predict the next word in a sentence based on the preceding context.

This predictive capability is honed through “self-supervised learning,” where the training labels come from the data itself rather than from human annotation: the correct answer for each prediction is simply the word that actually comes next in the text. The model predicts subsequent words, compares its predictions with the real text, and iteratively adjusts its internal parameters to reduce the prediction error. This intensive training builds a sophisticated internal representation of language.
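The next-word objective can be illustrated with a deliberately tiny stand-in. The sketch below is not how GPT is trained: it swaps the neural network and gradient descent for a simple bigram counter, and the one-sentence corpus is invented. What it does show is the self-supervised part, namely that every training label is taken from the text itself, and that quality is measured by how much probability the model assigns to the word that really came next.

```python
import math
from collections import Counter, defaultdict

def make_training_pairs(text):
    """Build (context, next word) pairs; the labels come from the text itself."""
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]

def train_bigram(text):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for context, target in make_training_pairs(text):
        counts[context[-1]][target] += 1
    return counts

def next_word_probs(counts, prev):
    """Convert the follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

def average_loss(counts, text):
    """Average negative log-probability of each actual next word."""
    pairs = make_training_pairs(text)
    losses = [-math.log(next_word_probs(counts, ctx[-1])[tgt])
              for ctx, tgt in pairs]
    return sum(losses) / len(losses)

# Invented toy corpus; real pre-training uses billions of words.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
print(round(average_loss(model, corpus), 3))
```

A lower average loss means the model was less surprised by the text. Pre-training a real GPT model pursues the same goal, but by adjusting billions of parameters rather than by counting.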

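Once pre-trained, a GPT model can then be fine-tuned for specific tasks, though modern iterations like GPT-3 and GPT-4 from OpenAI are often adept at “zero-shot” or “few-shot” learning, meaning they can perform new tasks given only a few examples in the prompt, or none at all. When given a prompt, the model generates text one token at a time (a token is a word or a piece of a word). At each step it computes a probability for every possible next token, picks one, appends it to the text, and repeats until the response is complete. The pick is often sampled from among the likeliest candidates rather than always being the single most probable token, which is why the same prompt can yield different responses. This iterative generation process is what makes GPT so versatile and seemingly intelligent.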
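The generation loop can be sketched in a few lines. Everything in this example is hypothetical: the `NEXT_TOKEN_PROBS` table is hand-written, and it conditions only on the previous token, whereas a real model computes its probabilities with a neural network conditioned on the entire preceding context. The loop itself (look up probabilities, pick a token, append, repeat until an end marker) has the same shape, and the `greedy` flag contrasts always taking the top token with sampling.

```python
import random

# Hypothetical next-token probabilities, written by hand for illustration.
NEXT_TOKEN_PROBS = {
    "<start>": {"GPT": 1.0},
    "GPT": {"writes": 0.6, "predicts": 0.4},
    "writes": {"text": 0.9, "code": 0.1},
    "predicts": {"tokens": 1.0},
    "text": {"<end>": 1.0},
    "code": {"<end>": 1.0},
    "tokens": {"<end>": 1.0},
}

def generate(greedy=True, max_tokens=10, seed=0):
    """Produce tokens one at a time until <end> or the length limit."""
    rng = random.Random(seed)
    token, output = "<start>", []
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[token]
        if greedy:
            # Always take the single most probable next token.
            token = max(probs, key=probs.get)
        else:
            # Sample in proportion to the probabilities.
            token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if token == "<end>":
            break
        output.append(token)
    return output

print(generate(greedy=True))   # ['GPT', 'writes', 'text']
print(generate(greedy=False, seed=1))
```

Greedy decoding always returns the same sequence for this table, while sampling can follow the less likely branches, which is the trade-off between predictability and variety that generation settings control.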

Key Applications of GPT: Transforming Industries and Daily Life

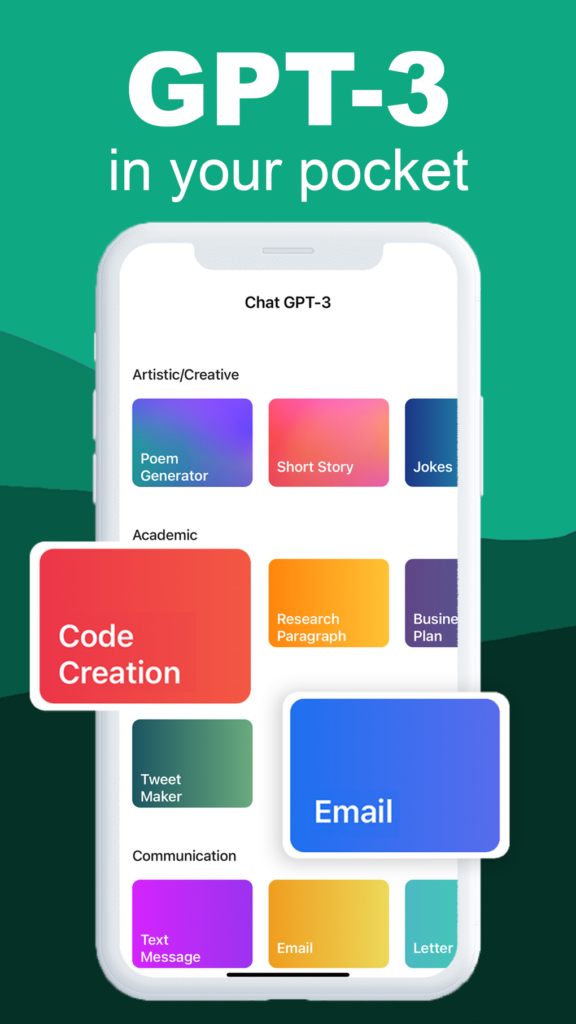

The versatility of GPT models has led to their adoption across numerous industries and everyday applications, fundamentally changing how we interact with information and technology. These AI systems are not just theoretical constructs; they are practical tools driving innovation and efficiency. From content creation to customer service, the impact of GPT is becoming increasingly pervasive.

One of the most significant applications of GPT is in content generation, where it can rapidly produce articles, marketing copy, social media posts, and even creative stories. This capability empowers marketers, writers, and businesses to scale their content efforts, freeing up human creativity for more strategic tasks. It also aids in translation, summarization, and rephrasing text, making information more accessible and digestible.

Furthermore, GPT models are revolutionizing customer service through advanced chatbots and virtual assistants that can handle complex queries, provide personalized support, and even troubleshoot technical issues. In software development, GPT assists engineers by generating code snippets, helping to debug programs, and explaining complex programming concepts. The potential for these models to augment human capabilities across various professional domains is immense, promising increased productivity and new avenues for innovation. For a deeper dive into the capabilities and applications of these models, see the Wikipedia article on large language models, which covers their development and impact.

The Future of GPT: Potential, Challenges, and Ethical Considerations

The future of GPT models holds immense potential, with researchers constantly pushing the boundaries of what these AI systems can achieve. We can expect to see even more sophisticated models that are multimodal, meaning they can understand and generate not only text but also images, audio, and video, leading to more immersive and intuitive user experiences. Further advancements in reasoning, long-context understanding, and personalization will unlock new applications currently unimaginable.

However, this rapid evolution also brings forth significant challenges that need careful consideration. Issues such as “hallucination,” where GPT models generate factually incorrect yet confidently stated information, remain a hurdle. Bias present in the training data can also be amplified by the model, leading to unfair or discriminatory outputs. Additionally, the computational resources and energy required to train and run these massive models pose environmental and accessibility concerns.

Ethical considerations are paramount as GPT technology becomes more integrated into society. Questions surrounding job displacement, the potential for misuse (e.g., generating misinformation or deepfakes), data privacy, and the need for transparency in AI decision-making require robust frameworks and ongoing public discourse. Ensuring that GPT development is guided by principles of fairness, accountability, and safety will be crucial for harnessing its benefits responsibly.

Frequently Asked Questions

What does GPT stand for?

GPT stands for Generative Pre-trained Transformer. Each part of the acronym describes a key characteristic of these AI models: “Generative” refers to their ability to create new text, “Pre-trained” indicates their extensive initial training on vast text datasets, and “Transformer” points to the specific neural network architecture they utilize. This combination allows them to process and generate human-like language effectively.

Who developed GPT?

The most prominent and widely recognized series of GPT models, including GPT-3 and GPT-4, has been developed by OpenAI, an artificial intelligence research laboratory. OpenAI has been a leading force in advancing large language models and making them accessible through APIs and various applications, significantly contributing to the current AI landscape.

Can GPT understand emotions?

While GPT models can process and generate text that expresses or references emotions, they do not “feel” or genuinely understand emotions in the human sense. They learn patterns in language associated with emotions from their training data and can simulate emotional responses or identify emotional tones in text. However, their understanding is purely statistical and based on linguistic correlations, not genuine subjective experience.

What are the limitations of GPT?

Despite their impressive capabilities, GPT models have several limitations. They can sometimes generate factually incorrect information (hallucinations), reflect biases present in their training data, and lack true common sense or real-world understanding. They also have a limited “memory” or context window for conversations, can struggle with complex reasoning tasks, and their knowledge is capped at the date of their last training data update.

Related Topics

- What Is GPT? A Beginner's Guide To Generative Pre-trained Transformers

- Practical Applications Of GPT In Business And Everyday Life

- Prompt Engineering: Mastering GPT For Better Results

- The Evolution Of GPT: From GPT-1 To GPT-4 And Beyond

- Ethical Considerations And Limitations Of GPT Technology

- Integrating GPT Into Your Workflow: APIs And Custom Solutions